SuperCLUE Reasoning Leaderboard Surprises: Is ZTE an AI Company?

ZTE, a major ICT firm, has unexpectedly topped an AI reasoning leaderboard, showcasing deep AI capabilities and a strategic shift toward AI that challenges industry norms.

ZTE Corporation, a large tech enterprise with tens of thousands of employees, has officially entered the AI arena, backed by 40 years of accumulated ICT expertise.

It's quite interesting that an information and communication company has clinched the top spot in an AI reasoning competition.

The May 2025 report of the SuperCLUE Chinese language model evaluation benchmark was released recently. It assessed large models from OpenAI, Google, DeepSeek, ByteDance, and other domestic and international AI companies, and published multiple leaderboards. The report shows that while overseas models generally lead in overall ability, domestic models excel in reasoning tasks: Doubao-1.5-thinking-pro-250415 and NebulaCoder-V6, from ZTE's Nebula Cloud Model team, tied for first place with a score of 67.4.

SuperCLUE’s reasoning leaderboard focuses on models’ logical thinking and problem-solving abilities, covering three core dimensions: mathematical reasoning, scientific reasoning, and code generation.

Doubao’s strong showing was to be expected from a specialist AI player. NebulaCoder-V6, however, comes from the Nebula Cloud Model team at ZTE, a veteran telecom company, and is the real dark horse. Not only did it top the reasoning leaderboard, it also tied for second overall with DeepSeek-R1, earning a silver medal.

This achievement has sparked curiosity about ZTE—traditionally known for base stations and switches—since most top models come from internet companies, AI labs, or startups. A longstanding ICT manufacturer suddenly leading in AI reasoning tasks involving abstract thinking and logical chains is a surprising cross-industry breakthrough.

So why is ZTE doing this, and how does it perform so well? To answer these questions, TechXue spoke with ZTE’s Chief Strategy & Ecosystem Expert Tu Jiashun, Nebula Cloud Model’s Chief Engineer Han Bingtao, and researcher Wu Qi about the close ties between communications and AI, the core technologies behind Nebula Cloud Model, and the future of this 40-year-old tech giant.

Why is ZTE heavily investing in AI?

At NVIDIA’s GTC conference in March, CEO Jensen Huang predicted, "AI can fundamentally change communications."

Tu Jiashun explained that this change is already happening. For example, the number of 4G and 5G base stations has increased significantly, but the number of operations staff has not grown proportionally. The core reason is that modern communication networks rely heavily on automation, forming "autonomous networks" that greatly reduce the need for manual operation.

In the upcoming 6G era, this transformation will accelerate. NVIDIA’s Senior Vice President Ronnie Vasishta mentioned, "The countdown to 6G has begun. Basic research is shifting focus to the next-generation wireless communication, which will be AI-native—embedded in hardware and software. The next-generation network will connect trillions of smart devices, requiring AI support."

Tu Jiashun agrees, believing that 6G will incorporate AI from the design stage, integrating it into network architecture, protocols, and functions.

Recognizing this disruptive trend early, ZTE has made forward-looking AI investments. It has established multiple AI teams, including those behind the Nebula Cloud large language model and telecom industry-specific models, and has made AI a key strategic focus that covers infrastructure, data centers, and industry applications. Recently, its Co-Sight intelligent system topped the GAIA benchmark.

Today, ZTE’s AI is deeply integrated into their operations—whether on the network, computing, or terminal side. Their Nebula Cloud Model generates 1.5 billion tokens daily, with code synthesis reaching tens of millions of lines, and AI-driven code accounting for 30% of internal development.

Through these efforts, ZTE has shed the stereotype of an ICT company and is evolving into an AI-driven tech enterprise, with its move into the AI domain accelerating.

How did Nebula Cloud Model win the championship?

The victory in SuperCLUE’s reasoning leaderboard is attributed to the team’s efficient training and optimization strategies for large models. From pretraining to supervised fine-tuning and reinforcement learning, every step aims to maximize reasoning capabilities.

Pretraining: Building an efficient knowledge graph to lay a solid foundation

The core goal during pretraining is to improve overall performance, akin to general education in human learning.

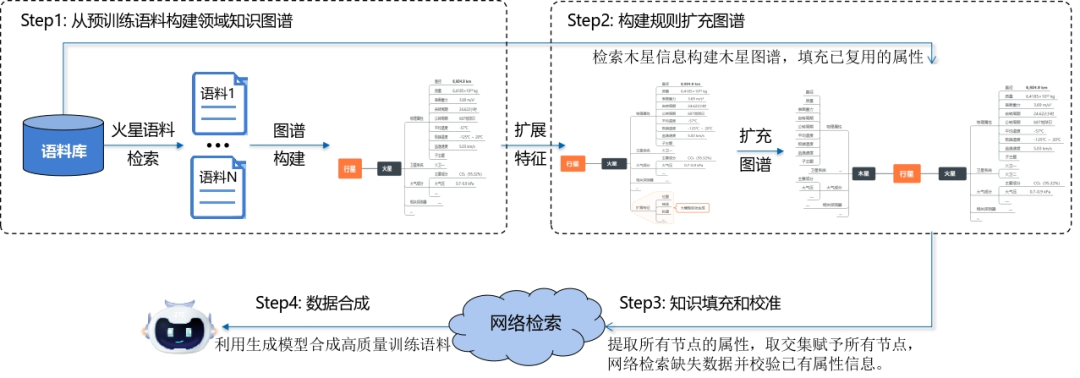

Data quality is crucial here. However, raw pretraining data often contains knowledge gaps and errors, leading to hallucinations in models. To address this, researchers developed a knowledge graph construction method called DASER (Domain-Aware Self-validating Entity Representation), which accurately identifies missing or incorrect knowledge in texts, then retrieves online data to fill gaps and correct errors, enhancing the model’s knowledge accuracy.

DASER relies on what the team calls "domain-shared attributes." For example, existing corpora contain abundant knowledge about Mars but only incomplete data about Jupiter. Traditional training would miss much of the Jupiter knowledge, causing hallucinations. DASER leverages properties shared within a domain (planets, for instance, all have orbital and rotational periods) to detect which attributes an entity is missing, then uses internet retrieval to fill them in.
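The gap-detection idea can be illustrated with a minimal Python sketch. It is not ZTE's DASER implementation: the function names, the `min_fraction` threshold, and the toy planet records are all assumptions made for illustration, and the retrieval step that would actually fill the gaps is left out.

```python
from collections import Counter

def shared_attributes(entities, min_fraction=0.5):
    """Return attributes carried by at least min_fraction of the entities.

    `entities` maps an entity name to a dict of attribute -> value.
    The threshold is a hypothetical knob, not something the article specifies.
    """
    counts = Counter(attr for attrs in entities.values() for attr in attrs)
    threshold = min_fraction * len(entities)
    return {attr for attr, n in counts.items() if n >= threshold}

def find_gaps(entities, min_fraction=0.5):
    """Map each entity to the domain-shared attributes it is missing."""
    shared = shared_attributes(entities, min_fraction)
    gaps = {}
    for name, attrs in entities.items():
        missing = sorted(shared - set(attrs))
        if missing:
            gaps[name] = missing
    return gaps

# Toy "planet" domain: Jupiter's record lacks a rotational period.
planets = {
    "Mars": {"orbital_period_days": 687, "rotational_period_hours": 24.6},
    "Earth": {"orbital_period_days": 365.25, "rotational_period_hours": 23.9},
    "Jupiter": {"orbital_period_days": 4333},
}

gaps = find_gaps(planets)
print(gaps)  # entities whose missing attributes would be sent to retrieval
```

In a fuller pipeline, each entry in `gaps` would become a retrieval query, and the retrieved value would be validated before being written back into the knowledge graph.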

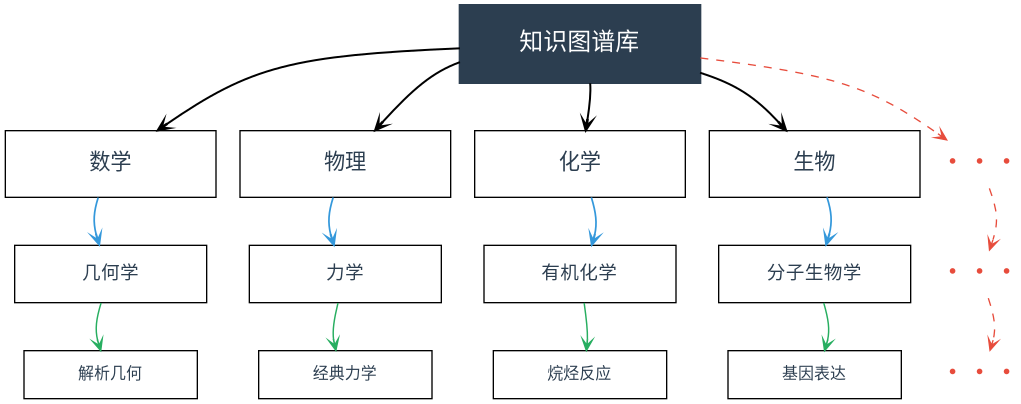

Using this method, the Nebula Cloud Model team built a comprehensive knowledge graph covering all disciplines in the national basic subject classification system. Model training efficiency and inference accuracy improved significantly. On a challenging private QA benchmark built by ZTE, accuracy increased from 61.93% to 66.48%, a gain of 4.55 percentage points.

Supervised fine-tuning: Critique learning + data flywheel for complex instructions

The goal of supervised fine-tuning (SFT) is to transform the general potential of pretrained models into domain-specific expertise, enabling understanding and execution of complex instructions—similar to higher education or vocational training.

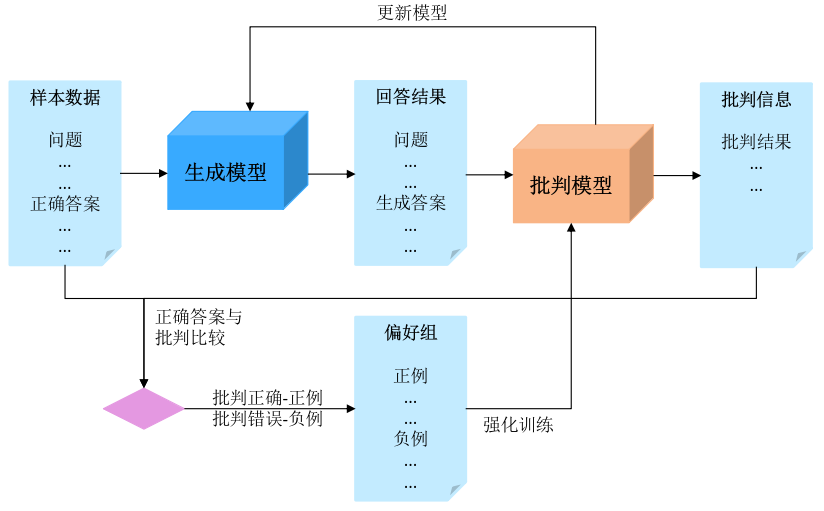

Researchers use two types of data: standard QA pairs for direct response training, and reasoning chain data that explicitly includes reasoning steps. They further employ Critique Learning (CL) to generate challenging samples, allowing models to critique and verify answers, creating a continuous "critique-reasoning" feedback loop.

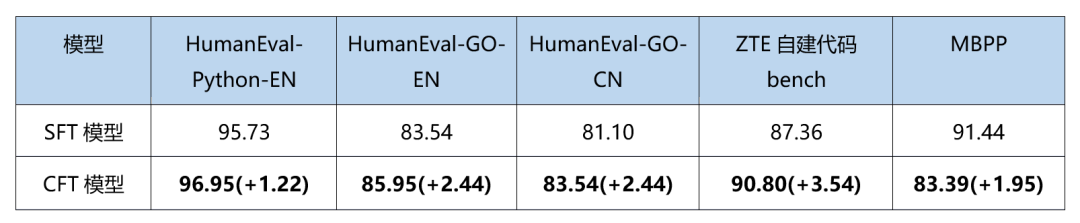

They found that CL data significantly improves reasoning accuracy, especially in math and coding tasks. The process involves generating initial answers, critiquing errors, producing corrected answers, and verifying correctness, forming a four-tuple training sample. Using only parts of this tuple (task description, error, critique) for training yields better results than using the full tuple.
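As a rough illustration of how such samples might be turned into training data, the Python sketch below builds prompt/target pairs from the partial tuple (task description, erroneous answer, critique). The field names, the prompt wording, and the `is_verified` callback are assumptions for illustration; the article does not describe the actual data format.

```python
from dataclasses import dataclass

@dataclass
class CritiqueSample:
    task: str              # task description
    wrong_answer: str      # initial answer that contains an error
    critique: str          # explanation of what went wrong
    corrected_answer: str  # answer produced after the critique

def to_partial_example(sample):
    """Build a training pair from task + erroneous answer + critique only.

    The corrected answer is deliberately left out of the target, mirroring
    the partial-tuple setup described in the text.
    """
    prompt = (
        f"Task:\n{sample.task}\n\n"
        f"Candidate answer:\n{sample.wrong_answer}\n\n"
        "Critique the candidate answer."
    )
    return {"prompt": prompt, "target": sample.critique}

def build_dataset(samples, is_verified):
    """Keep samples whose corrected answer passes verification."""
    return [to_partial_example(s) for s in samples if is_verified(s)]

samples = [
    CritiqueSample(
        task="Compute 2 + 3 * 4.",
        wrong_answer="20",
        critique="Multiplication binds tighter than addition, so 3 * 4 "
                 "must be evaluated before adding 2.",
        corrected_answer="14",
    ),
    CritiqueSample(
        task="Compute 2 + 3 * 4.",
        wrong_answer="20",
        critique="The addition was done incorrectly.",
        corrected_answer="15",  # still wrong, so it fails verification
    ),
]

# Hypothetical verifier: compare against a known reference answer.
examples = build_dataset(samples, is_verified=lambda s: s.corrected_answer == "14")
print(len(examples))
```

Verification here acts purely as a filter: the corrected answer decides whether a critique is trustworthy, even though it never appears in the training target.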

Compared to pure reasoning chain data, Critique Fine-Tuning (CFT) with CL/PCL algorithms boosts accuracy in reasoning tasks, including math and code.

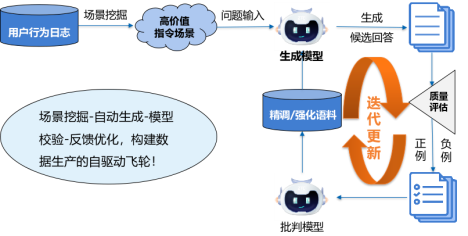

Additionally, to improve understanding of complex user instructions, ZTE constructs a data flywheel with four modules, many of which are automated, including scene mining and candidate answer generation. In the critical model verification module, they also use critique learning, creating a feedback loop that iteratively improves the model’s intent understanding.

Reinforcement Learning: Two-stage approach to improve accuracy and rigor

The goal of reinforcement learning (RL) is to optimize model behavior through environment feedback, similar to real-world work scenarios. Nebula Cloud Model employs a two-stage RL: first, global correction, then local refinement.

In the correction phase, they introduce Critique Reinforcement Learning (CRL), training on difficult STEM problems to iteratively improve accuracy. In the refinement phase, they address the issue that RL can reduce answer diversity—using fine-grained reward calculations for each token, leading to more reasonable answers and a 13% increase in human preference scores.

When models are fine-tuned with RL, answer diversity can decline, especially in code generation, where multiple correct solutions exist. Over-penalizing certain approaches can cause the model to avoid valid solutions, leading to a collapse of capabilities despite large-scale reinforcement data.

To address this, they use offline rejection sampling to generate minimal correction samples and refine reward calculations at a granular level, significantly reducing hallucinations and improving alignment with human preferences.
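One possible reading of "minimal correction samples" with token-level rewards is sketched below in Python. This is an interpretation, not the team's algorithm: the whitespace tokenization, the similarity measure from the standard-library `difflib` module, the `is_correct` verifier, and the penalty value of -1.0 are all assumptions.

```python
import difflib

def minimal_correction(rejected, candidates, is_correct):
    """Among sampled candidates that pass the verifier, return the one
    closest to the rejected answer (highest token-level similarity)."""
    accepted = [c for c in candidates if is_correct(c)]
    if not accepted:
        return None
    rejected_tokens = rejected.split()
    return max(
        accepted,
        key=lambda c: difflib.SequenceMatcher(
            None, rejected_tokens, c.split()
        ).ratio(),
    )

def token_penalties(rejected, corrected, penalty=-1.0):
    """Assign a penalty only to tokens of the rejected answer that the
    minimal correction replaces or deletes; untouched tokens get 0.0."""
    r, c = rejected.split(), corrected.split()
    rewards = [0.0] * len(r)
    matcher = difflib.SequenceMatcher(None, r, c)
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            for i in range(i1, i2):
                rewards[i] = penalty
    return rewards

rejected = "return a - b"
candidates = [
    "return a + b",
    "total = a + b ; return total",
    "return a * b",
]

# Hypothetical verifier; a real one would run unit tests on the code.
best = minimal_correction(rejected, candidates, lambda s: "a + b" in s)
print(best)
print(token_penalties(rejected, best))
```

Because only the tokens that differ from the minimal correction are penalized, a valid overall approach is not discouraged wholesale, which is the concern raised above about losing answer diversity.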

Seamless Transition from ICT to AI

When traditional ICT giants enter the AI field, do they face "cross-industry" challenges or make a seamless transition? The answer might be the latter.

This is because AI and ICT are more similar than they seem. Both revolve around data processing, exchange, and storage; both are complex, large-scale systems requiring efficient coordination.

Specifically, ICT involves vast networks of nodes, while AI requires chips, servers, storage, switches, and data centers—forming an efficient, green foundation. These systems need to optimize both locally and globally, demanding full-stack engineering, system practice, and optimization—strengths of ZTE and key to their strategic focus on "Smart Computing".

Moreover, ZTE has unique advantages in AI development. AI is a multidisciplinary engineering science, and its innovation depends heavily on engineering experience like parameter tuning, operator fusion, and algorithm optimization—areas where many early entrants struggle.

From ZTE’s perspective, they possess system engineering capabilities, stronger networking than pure IT companies, and hardware strength compared to pure model companies. Overall, ZTE is well-positioned to build the entire AI industry chain, from hardware to software, models, and industry applications.

They also have a large ecosystem of products being "AI-ified." If fully AI-enabled, the market potential is huge, enabling rapid iteration across scenarios and data feedback loops.

When traditional ICT giants embrace AI, how will the industry react? The answer likely lies in ZTE’s next steps.