Sebastian Raschka's Book Now Free to Read! "30 Essential Questions on Machine Learning and AI," Suitable for Beginners and Experts

Sebastian Raschka, author of 'Python Machine Learning,' offers free access to all 30 chapters of his book '30 Questions on Machine Learning & AI,' aiding learners at all levels.

Renowned AI tech blogger Sebastian Raschka, author of Python Machine Learning, is back with a generous offer! For the summer internship and interview season, he has made all 30 chapters of his book "30 Questions on Machine Learning & AI" freely available. He hopes the book helps as many readers as possible and wishes good luck to everyone preparing for interviews.

The book normally costs $49.99 (about 358 RMB) for the print edition plus e-book, and $39.99 (about 286 RMB) for the e-book alone.

Today, the field of machine learning and AI is developing at an unprecedented speed. Researchers and practitioners often find themselves exhausted trying to keep up with new concepts and technologies.

The book is organized into short, self-contained chapters that together form a comprehensive summary of the field, covering topics from beginner to expert level across multiple areas. Even experienced ML researchers and practitioners are likely to find something new to add to their skill set.

When a commenter asked, "Was this book written with AI?", Sebastian replied that it was not, since that would go against his personal ethics. Interestingly, most of the content was written before ChatGPT's first release in November 2022; the book was initially published on LeanPub and later by No Starch Press in 2024. If anything, the book may have ended up in ChatGPT's training data.

Sebastian also linked to a January 2023 update about the book, where he added new content including stateless and stateful training, proper evaluation metrics, and limited labeled data.

This book has received praise from many readers and industry peers.

Chip Huyen, author of Designing Machine Learning Systems, states, "Sebastian uniquely combines academic depth, engineering agility, and the ability to simplify complex topics. He can deeply explore any theoretical subject, verify new ideas through experiments, and explain them clearly in simple language. If you’re starting your machine learning journey, this book is your guide."

Ronald T. Kneusel, author of How AI Works, calls Sebastian's "Machine Learning Q and AI" a one-stop guide to key AI topics that are often missing from introductory courses. If you entered the world of AI through deep neural networks, this book will help you get your bearings and understand what comes next.

Next, let’s see what topics this book covers.

Book Overview

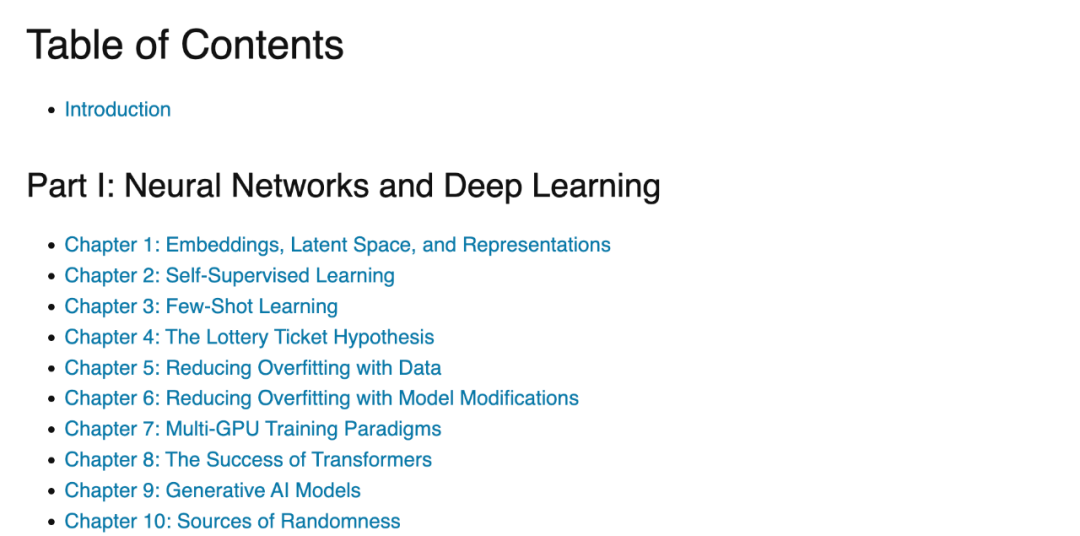

The book consists of five parts and 30 chapters.

The first part covers Neural Networks and Machine Learning, including:

Chapter 1: Embeddings, Latent Spaces, and Representations

Deep analysis of embeddings, latent vectors, and representations, explaining how these concepts help ML models encode information.

Chapter 2: Self-supervised Learning

Focuses on self-supervised methods, which let neural networks leverage large unlabeled datasets by deriving supervision signals (labels) from the data itself.

Chapter 3: Few-shot Learning

Introduces few-shot learning, a family of supervised techniques designed for settings with only a handful of labeled examples per class.

Chapter 4: The Lottery Ticket Hypothesis

Explores the theory that randomly initialized neural networks contain smaller subnetworks that, when trained on their own, can match the performance of the full network.

Chapter 5: Reducing Overfitting with Data

Discusses data augmentation and semi-supervised learning as solutions to overfitting issues.

Chapter 6: Reducing Overfitting via Model Modification

Analyzes regularization, model simplification, and ensemble methods to combat overfitting.

Chapter 7: Multi-GPU Training Paradigms

Details data parallelism and model parallelism for accelerated training.
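
To see why data parallelism works, here is a toy sketch of our own (not code from the book, and with hypothetical function names): each "worker" computes the gradient of a mean squared error loss on its own equal-sized shard of the batch, and averaging those gradients, the job an all-reduce does on real multi-GPU setups, reproduces the full-batch gradient. It assumes a one-feature linear model and a batch size divisible by the number of workers.

```python
def mse_grad(w, b, xs, ys):
    """Gradient of the mean squared error of y_hat = w * x + b with respect to (w, b)."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb

def data_parallel_grad(w, b, xs, ys, num_workers):
    """Split the batch into equal shards, compute one gradient per worker, then average."""
    assert len(xs) % num_workers == 0, "this sketch assumes equal-sized shards"
    shard = len(xs) // num_workers
    grads = [
        mse_grad(w, b, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    # Averaging the per-worker gradients plays the role of an all-reduce.
    gw = sum(g[0] for g in grads) / num_workers
    gb = sum(g[1] for g in grads) / num_workers
    return gw, gb

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 14.1, 15.9]
full = mse_grad(0.5, 0.0, xs, ys)
parallel = data_parallel_grad(0.5, 0.0, xs, ys, num_workers=4)
print(full, parallel)  # equal up to floating-point rounding
```

Model parallelism, by contrast, splits the model itself across devices, so it cannot be illustrated by splitting the data like this.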

Chapter 8: Success of Transformers

Explains why Transformer architectures became dominant, highlighting attention mechanisms, parallelization, and high parameter counts.

Chapter 9: Generative AI Models

Provides a comprehensive overview of models that generate images, text, and audio, analyzing their strengths and weaknesses.

Chapter 10: Sources of Randomness

Analyzes factors that cause variability in training and inference results, including both unintentional and intentional sources of randomness.

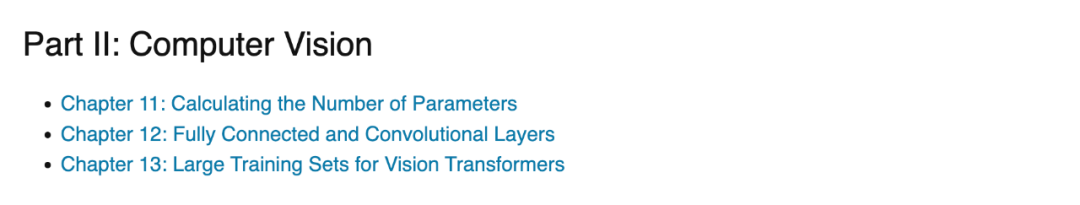

The second part covers Computer Vision, including:

Chapter 11: Computing Parameters

Details how to calculate the number of parameters in a CNN, which is crucial for assessing storage and memory requirements.
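
As a quick illustration (our own sketch, not the book's code), the standard counting rules are: a convolutional layer has one kernel_h × kernel_w × in_channels filter per output channel plus one bias per output channel, and a fully connected layer has an in_features × out_features weight matrix plus a bias vector. The small network below is hypothetical; the 8 × 8 spatial size before the classifier assumes, for example, a 32 × 32 input downsampled twice by 2 × 2 pooling (pooling layers have no parameters).

```python
def conv2d_params(in_channels, out_channels, kernel_h, kernel_w, bias=True):
    """Trainable parameters of a standard 2D convolutional layer."""
    weights = out_channels * in_channels * kernel_h * kernel_w
    return weights + (out_channels if bias else 0)

def linear_params(in_features, out_features, bias=True):
    """Trainable parameters of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)

# Hypothetical small CNN: two conv layers followed by a fully connected classifier.
layers = [
    conv2d_params(3, 16, 3, 3),    # 16 * 3 * 3 * 3 + 16  = 448
    conv2d_params(16, 32, 3, 3),   # 32 * 16 * 3 * 3 + 32 = 4,640
    linear_params(32 * 8 * 8, 10), # 2048 * 10 + 10       = 20,490
]
total = sum(layers)
print(total)            # 25578 parameters
print(total * 4)        # 102312 bytes if each parameter is stored as a 32-bit float
```

Multiplying by the bytes per parameter gives a rough lower bound on the memory needed just to store the weights; training needs more for gradients, optimizer state, and activations.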

Chapter 12: Fully Connected and Convolutional Layers

Discusses when convolutional layers can replace fully connected layers, important for hardware optimization and model simplification.
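
One well-known case where the two layer types coincide is when the convolution kernel is as large as its input: with no padding, the kernel fits in exactly one position, so the convolution reduces to a single dot product, which is what one unit of a fully connected layer computes on the flattened input. The toy sketch below (ours, not from the book; single channel, no bias, hypothetical names) checks this numerically.

```python
def conv2d_valid(image, kernel):
    """Single-channel 2D cross-correlation (what deep learning libraries call
    convolution) with stride 1 and no padding."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out

def fully_connected(x, weights):
    """One output unit of a fully connected layer without bias: a dot product."""
    return sum(xi * wi for xi, wi in zip(x, weights))

image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]

conv_out = conv2d_valid(image, kernel)           # kernel covers the whole input: 1x1 output
flat_image = [v for row in image for v in row]   # flatten row by row
flat_kernel = [v for row in kernel for v in row]
fc_out = fully_connected(flat_image, flat_kernel)
print(conv_out[0][0], fc_out)                    # the same value
```

With several output channels, each filter plays the role of one row of the fully connected weight matrix.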

Chapter 13: Large Training Sets for ViT

Explores why Vision Transformers require larger datasets compared to traditional CNNs.

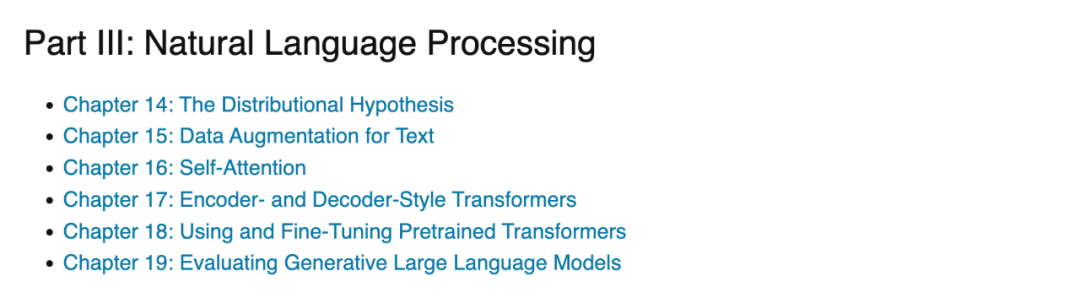

The third part focuses on Natural Language Processing, including:

Chapter 14: The Distributional Hypothesis

A deep dive into the distributional hypothesis, which states that words appearing in similar contexts tend to have similar meanings, a key insight for training ML models on text.
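
The hypothesis can be demonstrated on a toy corpus of our own (not from the book): represent each word by counts of its neighboring words, then compare words with cosine similarity. Words that occur in the same contexts end up with similar vectors. The window size and corpus here are arbitrary assumptions for illustration.

```python
import math
from collections import Counter

def context_vectors(sentences, window=1):
    """For each word, count the words appearing within `window` positions of it."""
    vectors = {}
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            ctx = vectors.setdefault(word, Counter())
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    ctx[tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity of two sparse count vectors (missing keys count as 0)."""
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

corpus = ["the cat drinks milk", "the dog drinks milk", "the car needs fuel"]
vecs = context_vectors(corpus)
print(cosine(vecs["cat"], vecs["dog"]))  # about 1.0: identical contexts
print(cosine(vecs["cat"], vecs["car"]))  # about 0.5: only "the" is shared
```

Learned embeddings such as word2vec exploit the same idea at scale, replacing raw counts with trained dense vectors.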

Chapter 15: Text Data Augmentation

Focuses on techniques for artificially expanding text datasets to improve model performance.

Chapter 16: Self-attention

Explains the self-attention mechanism at the core of modern large language models, which lets each input token relate to the other tokens in the sequence.
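
For readers who want to see the mechanics, here is a minimal sketch of scaled dot-product self-attention in plain Python (our own illustration, not the book's code). Each token is projected into a query, key, and value; the attention weights for a token are a softmax over its query's scaled dot products with every key, and its output is the corresponding weighted sum of values. The projection matrices are arbitrary illustrative numbers rather than trained weights, and there is no causal mask, so every token attends to every other token.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)                       # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over x (one row per token)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]         # transpose the keys
    scores = matmul(q, k_t)                      # token-to-token similarity scores
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy input: 3 tokens with 4-dimensional embeddings, projected down to 2 dimensions.
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]
w_q = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
w_k = [[0.2, 0.1], [0.4, 0.3], [0.6, 0.5], [0.8, 0.7]]
w_v = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]

context, attn = self_attention(x, w_q, w_k, w_v)
for row in attn:
    print([round(a, 3) for a in row])  # each row of attention weights sums to 1
```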

Chapter 17: Encoder-Decoder Transformers

Compares encoder and decoder architectures, clarifying their roles in various NLP tasks.

Chapter 18: Fine-tuning Pretrained Transformers

Details methods for adapting large pretrained models, analyzing their advantages and limitations.

Chapter 19: Evaluating Generative Language Models

Lists metrics like perplexity, BLEU, ROUGE, and BERTScore for assessing language models.
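
Perplexity is the simplest of these metrics to compute: it is the exponential of the average negative log-likelihood that the model assigns to the observed tokens, so lower is better. The sketch below is our own; it assumes you already have the probability the model gave to each actual next token, and the probabilities shown are made up.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log-likelihood of the observed tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

print(perplexity([0.25, 0.25, 0.25, 0.25]))  # about 4: like guessing uniformly among 4 tokens
print(perplexity([0.9, 0.8, 0.95, 0.7]))     # a more confident (and correct) model scores lower
```

BLEU, ROUGE, and BERTScore, by contrast, compare generated text against reference texts rather than scoring the model's own probabilities.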

The fourth part discusses Production and Deployment, including:

Chapter 20: Stateless and Stateful Training

Distinguishes between stateless and stateful approaches to training models in deployment, explaining their use cases in real-time inference and continuous learning.

Chapter 21: Data-Centric AI

Explores improving the dataset rather than the model, in contrast to traditional model-centric approaches.

Chapter 22: Accelerating Inference

Introduces techniques such as quantization and knowledge distillation that speed up inference with little or no loss of accuracy.
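
To give a feel for quantization, here is a minimal sketch (our own, not the book's method) of symmetric per-tensor int8 post-training quantization: weights are mapped to integers in [-127, 127] with a single scale factor, which cuts storage by 4× compared with 32-bit floats. Pure Python does not actually run faster this way; real speedups come from integer kernels on supporting hardware. The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0:                   # all-zero tensor: any scale works
        return [0] * len(weights), 1.0
    scale = max_abs / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.42, -1.37, 0.05, 0.91, -0.66]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, round(max_err, 5))  # rounding error is at most half a quantization step
```

The rounding error per weight is bounded by scale / 2, which is why accuracy usually degrades only slightly, although outlier weights that inflate the scale can make it worse.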

Chapter 23: Data Distribution Shift

Analyzes challenges when deployed models face data distribution changes, including covariate shift, concept drift, label shift, and domain shift.

The fifth part covers Predictive Performance and Model Evaluation, including:

Chapter 24: Poisson and Ordinal Regression

Explains the differences between Poisson regression, used for count data, and ordinal regression, used for ordered categories without assuming equal spacing between them.

Chapter 25: Confidence Intervals

Discusses methods for constructing confidence intervals for classifier performance, including the normal approximation, bootstrapping, and retraining with multiple random seeds.
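
Two of these methods fit in a few lines. The sketch below is our own illustration (hypothetical function names and made-up test results): the normal-approximation interval uses acc ± z·sqrt(acc·(1 − acc)/n), and the percentile bootstrap resamples the test-set predictions with replacement and reads off the 2.5th and 97.5th percentiles of the resampled accuracies. The multiple-seeds approach would instead retrain the model several times, which is out of scope here.

```python
import math
import random

def normal_approx_ci(correct, z=1.96):
    """Normal-approximation interval for accuracy from per-example 0/1 correctness."""
    n = len(correct)
    acc = sum(correct) / n
    half_width = z * math.sqrt(acc * (1 - acc) / n)
    return acc - half_width, acc + half_width

def bootstrap_ci(correct, num_rounds=1000, alpha=0.05, seed=123):
    """Percentile bootstrap interval: resample the test set with replacement."""
    rng = random.Random(seed)
    n = len(correct)
    accs = sorted(sum(rng.choices(correct, k=n)) / n for _ in range(num_rounds))
    lower = accs[int(alpha / 2 * num_rounds)]
    upper = accs[int((1 - alpha / 2) * num_rounds) - 1]
    return lower, upper

# Hypothetical test-set results: 1 = correct prediction, 0 = wrong (85 of 100 correct).
correct = [1] * 85 + [0] * 15
print(normal_approx_ci(correct))  # roughly (0.78, 0.92)
print(bootstrap_ci(correct))
```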

Chapter 26: Confidence Intervals vs. Conformal Prediction

Distinguishes between the two: confidence intervals estimate the uncertainty of a parameter such as model accuracy, while conformal prediction produces prediction sets or intervals for individual predictions with coverage guarantees.
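
The sketch below shows one common variant, split conformal prediction for classification. It is our own illustration, not code from the book, and the calibration data is made up. The nonconformity score is 1 minus the predicted probability of the true label; the threshold is the ⌈(n + 1)(1 − α)⌉-th smallest calibration score; and the prediction set for a new input contains every label whose score is within the threshold. Assuming the calibration and test data are exchangeable, such sets contain the true label with probability at least 1 − α on average.

```python
import math

def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Split conformal: threshold from the scores 1 - p(true label) on a calibration set."""
    scores = sorted(1 - probs[y] for probs, y in zip(cal_probs, cal_labels))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # 1-based rank of the corrected quantile
    if rank > n:
        return 1.0                           # too few calibration points: include every label
    return scores[rank - 1]

def prediction_set(probs, threshold):
    """All labels whose nonconformity score does not exceed the threshold."""
    return [label for label, p in enumerate(probs) if 1 - p <= threshold]

# Hypothetical softmax outputs of a 3-class classifier on a held-out calibration set.
cal_probs = [
    [0.8, 0.1, 0.1], [0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.1, 0.2, 0.7],
    [0.5, 0.4, 0.1], [0.3, 0.3, 0.4], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6],
    [0.4, 0.5, 0.1], [0.7, 0.2, 0.1],
]
cal_labels = [0, 0, 1, 2, 1, 2, 1, 2, 0, 0]

qhat = conformal_threshold(cal_probs, cal_labels, alpha=0.2)
print(qhat, prediction_set([0.55, 0.35, 0.10], qhat))
```

Note the difference in output: a confidence interval says something about the model's overall accuracy, while each prediction set here is tied to a single input.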

Chapter 27: Proper Evaluation Metrics

Highlights the core properties of a proper metric and evaluates whether common loss functions such as MSE and cross-entropy satisfy them.
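
A proper (distance) metric must satisfy non-negativity, identity of indiscernibles, symmetry, and the triangle inequality. The quick check below is our own example, not taken from the book: squared error between scalars violates the triangle inequality, while its square root, which for scalars is the absolute error, satisfies it.

```python
def squared_error(a, b):
    return (a - b) ** 2

def absolute_error(a, b):
    return abs(a - b)

def satisfies_triangle(d, x, y, z):
    """Triangle inequality: d(x, z) <= d(x, y) + d(y, z)."""
    return d(x, z) <= d(x, y) + d(y, z)

print(satisfies_triangle(squared_error, 0, 1, 2))   # False: 4 > 1 + 1
print(satisfies_triangle(absolute_error, 0, 1, 2))  # True: 2 <= 1 + 1
```

A single counterexample is enough to show that a function is not a proper metric; showing that one is requires a proof for all inputs.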

Chapter 28: The Role of 'k' in k-Fold Cross-Validation

Analyzes the trade-offs in choosing different 'k' values for cross-validation.
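
One side of the trade-off is easy to quantify: with n examples and k folds, each model is trained on roughly n(k − 1)/k examples, so a larger k gives training sets closer to the full dataset but requires k separate model fits. The helper below is a hypothetical sketch of our own; it builds contiguous folds without shuffling, whereas in practice you would usually shuffle (or stratify) first.

```python
def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds whose sizes differ by at most one."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

n = 100
for k in (2, 5, 10, n):  # k = n is leave-one-out cross-validation
    folds = k_fold_indices(n, k)
    train_size = n - len(folds[0])
    print(f"k={k:>3}: {k} model fits, each trained on {train_size} of {n} examples")
```

For n = 100 this prints training sizes of 50, 80, 90, and 99 for k = 2, 5, 10, and 100, alongside a 50-fold increase in the number of fits between k = 2 and leave-one-out.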

Chapter 29: Distribution Shift Between Training and Testing

Proposes solutions for performance problems caused by differences between the training and test distributions, including adversarial validation.

Chapter 30: Limited Labeled Data

Offers techniques such as labeling more data, bootstrapping, transfer learning, active learning, and multimodal learning for working with limited labeled data.