ICCV 2025 | UniOcc: Unified Dataset and Benchmark Platform for Occupancy Prediction and Reasoning in Autonomous Driving

UniOcc, a comprehensive benchmark for 3D occupancy forecasting in autonomous driving, integrates multi-source data, introduces voxel-level motion flow, and supports multi-vehicle collaboration, advancing perception research.

Researchers from UC Riverside, University of Michigan, University of Wisconsin-Madison, and Texas A&M University have introduced UniOcc, the first unified benchmark framework for semantic occupancy grid construction and prediction in autonomous driving, presented at ICCV 2025.

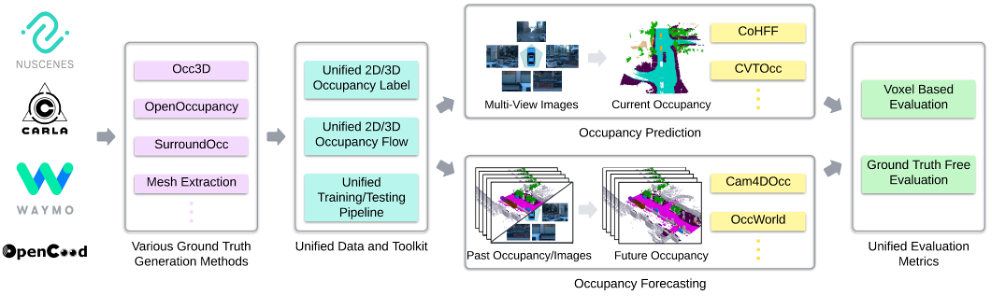

UniOcc combines real-world datasets (nuScenes, Waymo) and simulation environments (CARLA, OpenCOOD) under a unified voxel format and semantic label set. It is the first to provide voxel-level forward and backward motion flow annotations, and it supports multi-vehicle cooperative occupancy prediction and reasoning. To overcome the limitations of evaluating against pseudo-labels, UniOcc designs several ground-truth-free metrics that assess object shape plausibility and temporal consistency. Experiments with multiple state-of-the-art models show clear benefits from using motion flow, improved cross-domain generalization, and gains from collaborative prediction.

- Paper Title: UniOcc: A Unified Benchmark for Occupancy Forecasting and Prediction in Autonomous Driving

- Project Homepage: https://uniocc.github.io/

- Code Open Source: https://github.com/tasl-lab/UniOcc

- Dataset Download:

  - Hugging Face: https://huggingface.co/datasets/tasl-lab/uniocc

  - Google Drive: https://drive.google.com/drive/folders/18TSklDPPW1IwXvfTb6DtSNLhVud5-8Pw?usp=sharing

  - Baidu Cloud: https://pan.baidu.com/s/17Pk2ni8BwwU4T2fRmVROeA?pwd=kdfj (Password: kdfj)

Background and Challenges

3D occupancy grids are central to autonomous driving perception: the task is to construct the current 3D occupancy of a scene, or forecast its future occupancy, from sensor data. Current challenges include:

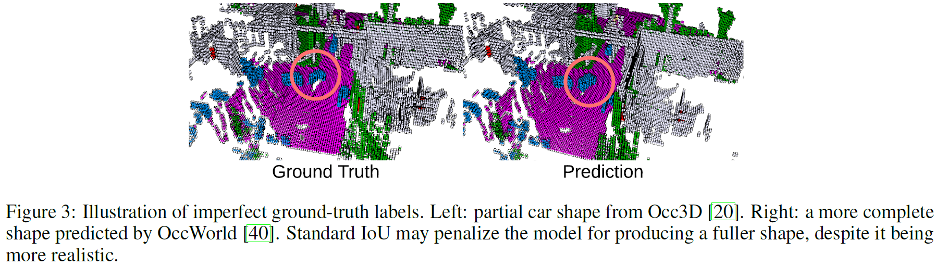

- Pseudo-label defects: Major datasets such as nuScenes and Waymo lack true occupancy labels and instead rely on pseudo-labels derived heuristically from LiDAR. These pseudo-labels cover only visible surfaces, so models trained on them learn incomplete object shapes and perform poorly. Figure 3 compares Occ3D pseudo-labels with model predictions.

- Data fragmentation: Existing methods are typically built around a single data source, and datasets differ in format, sampling rate, and annotation, so each one requires separate adaptation for training and evaluation. A unified format and toolchain are needed to improve cross-dataset generalization.

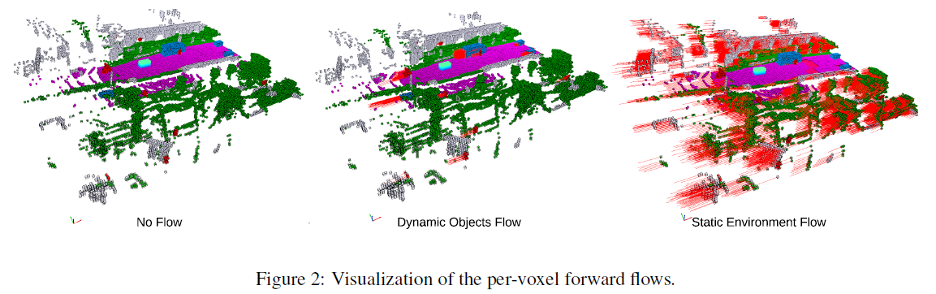

- Missing dynamic information: Current 3D occupancy labels often lack motion information. UniOcc introduces voxel-level motion flow annotations that capture both translation and rotation, supporting better modeling of dynamic scenes (see below).

- Collaborative driving: Although multi-vehicle perception is a cutting-edge direction, datasets supporting multi-vehicle occupancy prediction were lacking. UniOcc extends OpenCOOD to multi-vehicle occupancy scenarios, making it the first open benchmark for multi-vehicle collaborative occupancy prediction.

Four Key Innovations of UniOcc

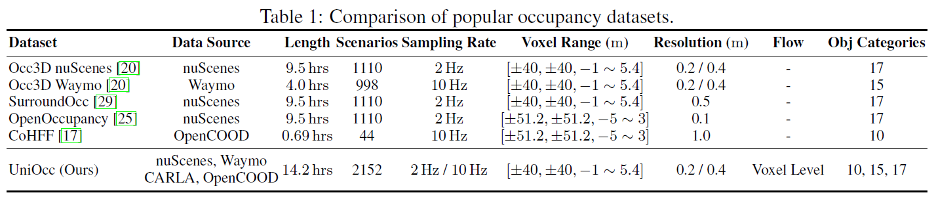

Unified multi-source data processing: UniOcc aggregates data from real-world scenes (nuScenes, Waymo) and simulations (CARLA, OpenCOOD), providing standardized preprocessing and data loaders. It is the first framework to integrate multiple occupancy datasets, enabling cross-domain training and evaluation (Table 1).
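To make the idea of a unified format concrete, here is a minimal sketch of two preprocessing steps any such loader has to perform: mapping each source's native class names into one shared label space, and bringing sequences recorded at different rates to a common frame rate (for example, nuScenes annotates keyframes at 2 Hz, while Waymo sequences run at 10 Hz). The unified label IDs, the `OccFrame` record, the `"empty"` class name, and the helpers `to_unified` and `align_rate` are hypothetical illustrations, not UniOcc's actual loader API or label table.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical unified label IDs (illustrative only; not UniOcc's actual label table).
FREE, CAR, PEDESTRIAN, ROAD, OTHER = 0, 1, 2, 3, 4

# Illustrative mappings from each source's native class names to unified IDs.
SOURCE_TO_UNIFIED: Dict[str, Dict[str, int]] = {
    "nuscenes": {
        "empty": FREE,
        "vehicle.car": CAR,
        "human.pedestrian.adult": PEDESTRIAN,
        "flat.driveable_surface": ROAD,
    },
    "carla": {"empty": FREE, "Vehicles": CAR, "Pedestrian": PEDESTRIAN, "Road": ROAD},
}


@dataclass
class OccFrame:
    """One frame in the shared format (hypothetical record layout)."""
    source: str
    timestamp: float
    labels: List[int] = field(default_factory=list)  # flattened grid of unified IDs


def to_unified(source: str, timestamp: float, native: List[str]) -> OccFrame:
    """Map a frame's per-voxel native class names into the unified label space."""
    mapping = SOURCE_TO_UNIFIED[source]
    # Any class without an explicit mapping falls into the catch-all OTHER class.
    return OccFrame(source, timestamp, [mapping.get(name, OTHER) for name in native])


def align_rate(frames: List[OccFrame], src_hz: int, target_hz: int) -> List[OccFrame]:
    """Subsample a sequence to a common rate; assumes src_hz is a multiple of target_hz."""
    if src_hz % target_hz != 0:
        raise ValueError("src_hz must be an integer multiple of target_hz")
    return frames[:: src_hz // target_hz]
```

Keeping a catch-all class means an unmapped native label never silently becomes free space, which would otherwise corrupt both training targets and evaluation.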

Voxel-level motion flow annotations: UniOcc provides forward and backward voxel-level velocity vectors that capture both the translation and the rotation of objects. This is the first such annotation in occupancy prediction, and it helps models better understand scene dynamics (Figure 2).
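Per-voxel flow that reflects rotation as well as translation can be derived from an object's pose in consecutive frames, assuming every voxel moves rigidly with the tracked object it belongs to. The sketch below limits rotation to yaw about the vertical axis, a common simplification for road agents; the function names are hypothetical, and UniOcc's actual annotation procedure may differ. Forward flow for a voxel at frame t uses poses (t, t+1); backward flow for a voxel at frame t+1 simply swaps them. Dividing a displacement by the frame interval turns it into a velocity.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rot_z(yaw: float, v: Vec3) -> Vec3:
    """Rotate vector v about the vertical (z) axis by `yaw` radians."""
    c, s = math.cos(yaw), math.sin(yaw)
    x, y, z = v
    return (c * x - s * y, s * x + c * y, z)


def rigid_flow(p: Vec3, center_a: Vec3, yaw_a: float,
               center_b: Vec3, yaw_b: float) -> Vec3:
    """Displacement of voxel center p when it moves rigidly with an object
    from pose A (center_a, yaw_a) to pose B (center_b, yaw_b)."""
    # Express p in the object's local frame at pose A.
    offset = (p[0] - center_a[0], p[1] - center_a[1], p[2] - center_a[2])
    local = rot_z(-yaw_a, offset)
    # Place the same local point at pose B, back in world coordinates.
    rx, ry, rz = rot_z(yaw_b, local)
    moved = (rx + center_b[0], ry + center_b[1], rz + center_b[2])
    return (moved[0] - p[0], moved[1] - p[1], moved[2] - p[2])
```

For an object whose center moves 1 m along x while turning 90 degrees, the voxel 1 m ahead of the center moves by roughly (0, 1, 0); a translation-only annotation would instead assign (1, 0, 0) to every voxel of the object. Backward flow from the moved voxel is the exact negation of its forward flow.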

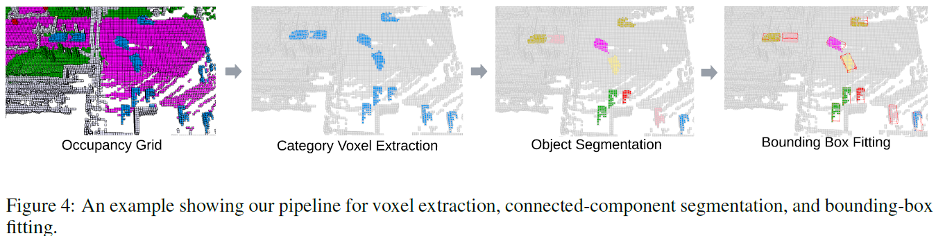

Pseudo-ground-truth-free evaluation metrics: UniOcc proposes metrics and tools that do not rely on perfect labels, using Gaussian Mixture Models (GMMs) to assess the shape plausibility and temporal consistency of predictions (Figure 4).
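One way a GMM can score shape plausibility without ground-truth labels: fit a mixture to features of realistic objects (for instance, car extents), then evaluate the log-density of each predicted object's features under it. This sketch assumes diagonal covariances, uses the axis-aligned extent of an object's voxels as its feature, and uses made-up mixture parameters and an assumed 0.4 m voxel size purely for illustration; in practice the parameters would be fitted with EM (e.g. scikit-learn's `GaussianMixture`). The feature choice and numbers are assumptions, not UniOcc's published configuration.

```python
import math
from typing import List, Sequence, Tuple

# A mixture component: (weight, per-dimension means, per-dimension variances).
Component = Tuple[float, Sequence[float], Sequence[float]]

# Illustrative parameters for car extents (length, width, height) in meters.
# These numbers are made up for the example, not fitted to any dataset.
CAR_GMM: List[Component] = [
    (0.7, (4.5, 1.9, 1.6), (0.25, 0.04, 0.04)),
    (0.3, (5.2, 2.0, 1.9), (0.36, 0.04, 0.09)),
]
VOXEL_SIZE = 0.4  # meters per voxel (assumed)


def gmm_log_density(x: Sequence[float], comps: List[Component]) -> float:
    """Log-density of feature vector x under a diagonal-covariance Gaussian mixture."""
    terms = []
    for weight, mean, var in comps:
        lp = math.log(weight)
        for xi, mi, vi in zip(x, mean, var):
            lp -= 0.5 * (math.log(2 * math.pi * vi) + (xi - mi) ** 2 / vi)
        terms.append(lp)
    top = max(terms)  # log-sum-exp keeps the sum numerically stable
    return top + math.log(sum(math.exp(t - top) for t in terms))


def extents(voxels: List[Tuple[int, int, int]],
            voxel_size: float = VOXEL_SIZE) -> List[float]:
    """Axis-aligned extent (meters) of an object's occupied voxels along x, y, z."""
    return [(max(v[a] for v in voxels) - min(v[a] for v in voxels) + 1) * voxel_size
            for a in range(3)]
```

A car whose predicted voxels cover only its near half, the visible-surface failure mode described for pseudo-labels, scores far lower than a complete one, so a threshold on the log-density can flag implausible shapes.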

Supporting collaborative prediction: Extending OpenCOOD, UniOcc covers multi-vehicle perception scenarios, enabling research on sensor fusion across vehicles.

Experimental Validation

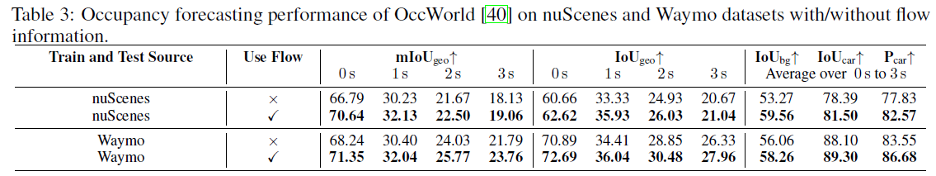

Incorporating motion flow information: Integrating UniOcc's voxel motion flow into models such as OccWorld significantly improves prediction performance; Table 3 shows higher mIoU on both nuScenes and Waymo.
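For reference, mIoU is the standard semantic occupancy metric: for each class c, IoU_c = TP_c / (TP_c + FP_c + FN_c) counted over voxels, and mIoU averages IoU_c over the evaluated classes. Below is a minimal sketch over flattened label grids. Which classes are evaluated (for example, whether a "free" class is excluded) and how classes absent from both prediction and ground truth are treated vary between benchmarks, so those choices here are assumptions, not UniOcc's exact protocol.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence


def per_class_iou(pred: Sequence[int], gt: Sequence[int],
                  classes: Iterable[int]) -> Dict[int, float]:
    """IoU_c = TP_c / (TP_c + FP_c + FN_c), counted over all voxels."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for p, g in zip(pred, gt):
        if p == g:
            tp[p] += 1
        else:
            fp[p] += 1  # voxel wrongly predicted as class p
            fn[g] += 1  # voxel of class g that was missed
    ious = {}
    for c in classes:
        union = tp[c] + fp[c] + fn[c]
        if union > 0:  # skip classes absent from both prediction and ground truth
            ious[c] = tp[c] / union
    return ious


def mean_iou(pred: Sequence[int], gt: Sequence[int], classes: Iterable[int]) -> float:
    """Average IoU over the evaluated classes that actually occur."""
    ious = per_class_iou(pred, gt, classes)
    return sum(ious.values()) / len(ious) if ious else 0.0
```

Passing only the semantic classes (here, leaving out class 0) is how this sketch keeps the large number of free voxels from dominating the score.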

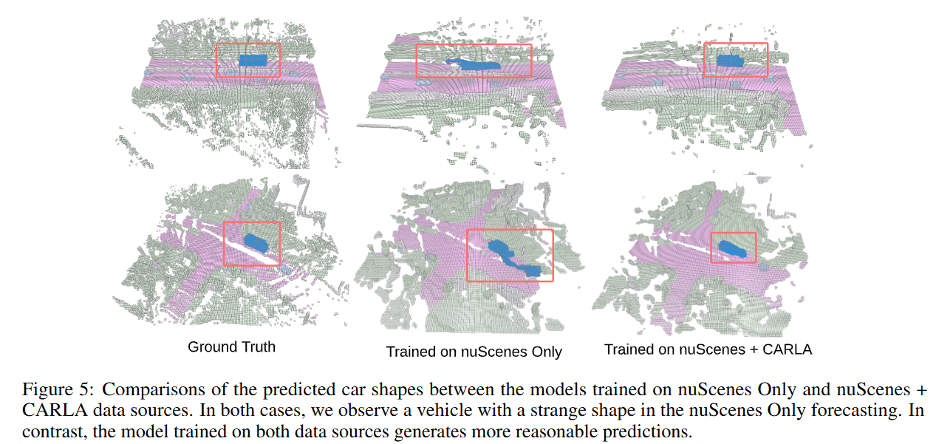

Multi-source joint training: Training on multi-source data enhances cross-domain generalization. Table 4 shows that joint training on nuScenes and CARLA outperforms single-source training, with improved realism in generated objects (Figure 5).

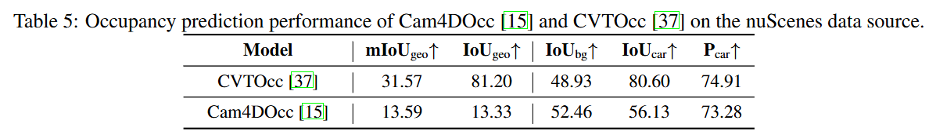

Evaluating existing occupancy prediction models: UniOcc's tools can measure the prediction quality of models such as Cam4DOcc and CVTOcc and categorize their incomplete predictions (Figure 3).
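The ground-truth-free idea can be illustrated with a simple temporal-consistency check: when a model's prediction at frame t is advanced by its motion flow, it should largely agree with the model's own prediction at frame t+1, and objects that shrink or vanish between frames lower the score. This is a hedged sketch of the general idea, not UniOcc's published metric; it assumes occupancy is given as sets of voxel indices and flow is already expressed in voxel units per frame, and the helper names are hypothetical.

```python
import math
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]
Flow = Tuple[float, float, float]


def warp(occ: Set[Voxel], flow: Dict[Voxel, Flow]) -> Set[Voxel]:
    """Move each occupied voxel by its forward flow (in voxel units) and snap to the grid."""
    warped = set()
    for v in occ:
        d = flow.get(v, (0.0, 0.0, 0.0))  # voxels without flow are treated as static
        # floor(x + 0.5) rounds halves up, avoiding Python's round-half-to-even.
        warped.add(tuple(vi + math.floor(di + 0.5) for vi, di in zip(v, d)))
    return warped


def temporal_consistency(occ_t: Set[Voxel], flow_t: Dict[Voxel, Flow],
                         occ_next: Set[Voxel]) -> float:
    """IoU between frame t warped forward by its flow and the prediction at t+1."""
    warped = warp(occ_t, flow_t)
    union = warped | occ_next
    return len(warped & occ_next) / len(union) if union else 1.0
```

A three-voxel car moving two voxels forward scores 1.0 if the next prediction contains all three shifted voxels, and about 0.67 if one of them disappears.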

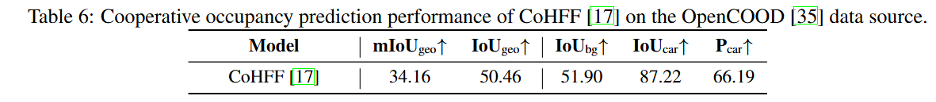

Collaborative prediction benefits: In multi-vehicle scenarios, sharing sensor data improves perception. For example, CoHFF achieves 87.22% IoU on the Car category in OpenCOOD, demonstrating the advantage of collaborative sensing.
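Why sharing helps can be seen with a simple late-fusion sketch: a collaborator's occupied voxels are transformed into the ego vehicle's frame using their relative pose and merged with the ego's own voxels, filling in regions the ego cannot see. This is not how CoHFF works (it is a learned model that fuses features); the helper names are hypothetical, and the sketch assumes both grids share one voxel size, a planar relative pose (x, y, yaw), and no change in height.

```python
import math
from typing import Set, Tuple

Voxel = Tuple[int, int, int]


def to_ego(voxels: Set[Voxel], voxel_size: float,
           dx: float, dy: float, yaw: float) -> Set[Voxel]:
    """Re-grid a collaborator's occupied voxels into the ego frame.

    (dx, dy, yaw) is the collaborator's pose in the ego frame (meters, radians).
    Index (0, 0, k) is assumed to start at each vehicle's own origin.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    out = set()
    for i, j, k in voxels:
        # Voxel center in the collaborator's frame, in meters.
        x, y = (i + 0.5) * voxel_size, (j + 0.5) * voxel_size
        # Rigid 2D transform into the ego frame, then snap back to ego voxel indices.
        ex, ey = c * x - s * y + dx, s * x + c * y + dy
        out.add((math.floor(ex / voxel_size), math.floor(ey / voxel_size), k))
    return out


def iou(pred: Set[Voxel], gt: Set[Voxel]) -> float:
    """Intersection over union of two occupied-voxel sets."""
    union = pred | gt
    return len(pred & gt) / len(union) if union else 1.0
```

With 0.5 m voxels, a collaborator 2 m ahead and facing the ego vehicle can see the far half of a car that the ego observes only partially: the ego's own voxels reach an IoU of 0.5 against the full object, while the fused set covers it completely.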

Open-source and application prospects: UniOcc’s unified framework supports various occupancy tasks, including single-frame, multi-frame, multi-vehicle prediction, and dynamic object tracking. It simplifies research and promotes cross-domain generalization.

In the future, UniOcc’s unified data format and flow annotations will lay the foundation for training and evaluating multimodal and language models in autonomous driving, fostering innovation in semantic occupancy prediction.