Did 'Hallucination' Originate from Karpathy a Decade Ago? The Naming Maestro Who Popularized Many AI Concepts

Karpathy, a renowned AI expert, coined the term 'hallucination' for AI errors. His influence extends to many key AI concepts, shaping industry terminology and understanding.

Naming King Karpathy.

Unexpectedly, the term 'hallucination' was actually coined by AI expert Andrej Karpathy.

Recently, a netizen came across a passage in a new book, The Thinking Machine, that reads: "Karpathy admits his neural networks have limitations: they only mimic language without truly understanding it. When they encounter concepts they don't understand, they 'proudly' generate meaningless content. Karpathy calls these errors 'hallucinations.'"

This post was also seen by Karpathy himself, who commented: "I believe this is true. I used this term in my 2015 blog post, 'The Unreasonable Effectiveness of RNNs.' To the best of my memory, I also 'hallucinated' this term myself."

Following Karpathy's pointer, we found the blog post, which does contain references to 'hallucination.' In it, he pointed out that models can 'hallucinate' URLs and math problems. It was not until ChatGPT emerged in 2022 that the term became widely used and hallucination became a hot research area.

Whether anyone used 'hallucination' or 'hallucinate' to describe similar phenomena before 2015 is harder to establish; answering that would require reviewing many papers.

This interesting origin story once again shows that Karpathy is a true naming master in the AI community. He proposed 'Software 2.0' in 2017 and 'Software 3.0' in 2025, as well as 'Vibe Coding' and 'Bacterial Programming.' Although 'Context Engineering' was not his term, it gained popularity after his repost and commentary. No other AI expert's influence in promoting new concepts can compare to Karpathy's.

In scientific research, don't underestimate the power of naming. As Gemini summarized, naming is the 'foundational act of creating knowledge.' Precise naming is like an 'address' for classification and a 'stable target' for global scientists to focus on.

Over the past decade, the concepts named by Karpathy have gained increasing attention, which is also an important way he has contributed to science.

Software 2.0, Software 3.0

Back in 2017, Karpathy proposed the term 'Software 2.0.'

In the article introducing the term, Karpathy explained that Software 1.0 is the classic stack written in languages such as Python and C++, composed of explicit instructions written by programmers. These instructions guide the computer step by step.

In contrast, Software 2.0 is written in more abstract, less human-friendly languages, like neural network weights. Humans don't write this code directly because the number of parameters is huge—millions in a typical network—and manually tuning weights is nearly impossible.

To clarify, in Software 1.0, source code (like .cpp files) is compiled into executables that perform tasks. In Software 2.0, the source code consists of the dataset that defines the desired behavior and the neural network architecture, and training essentially compiles the dataset into a trained model.

In summary, Software 1.0 is the classic coding era, requiring precise understanding of syntax and logic. Software 2.0 is the neural network era, where models are trained via data rather than explicit rules.
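To make the contrast concrete, here is a minimal sketch that is not taken from Karpathy's article: the same temperature conversion written once as an explicit rule (Software 1.0) and once as a tiny linear model whose two weights are learned from example pairs (Software 2.0). The function names, the toy dataset, and the learning-rate and epoch settings are all illustrative assumptions.

```python
# Software 1.0: the programmer states the rule explicitly.
def to_fahrenheit_v1(celsius: float) -> float:
    return celsius * 1.8 + 32.0


# Software 2.0: the "source code" is a dataset plus an architecture.
# Toy dataset (an assumption for illustration): (celsius, fahrenheit) pairs.
DATA = [
    (-1.0, 30.2), (-0.5, 31.1), (0.0, 32.0), (0.5, 32.9),
    (1.0, 33.8), (1.5, 34.7), (2.0, 35.6),
]


def train_linear(data, lr=0.1, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def to_fahrenheit_v2(celsius: float, weights) -> float:
    """Apply the learned weights; no conversion rule is written here."""
    w, b = weights
    return w * celsius + b


if __name__ == "__main__":
    weights = train_linear(DATA)  # "compiling" the data into weights
    print(to_fahrenheit_v1(1.0), to_fahrenheit_v2(1.0, weights))
```

In the second version nobody typed the constants 1.8 and 32; they are recovered from the data, which is the sense in which the dataset plays the role of source code.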

More recently, in 2025, Karpathy introduced another concept: Software 3.0, the era of prompt engineering. Developers and even non-developers can describe what they want in plain English (e.g., "build a website to track daily tasks"), and AI generates the code.

Source: https://www.latent.space/p/s3

Karpathy emphasizes several key features of Software 3.0:

- LLMs as computing platforms: Karpathy likens large language models to utilities such as electricity. Training large models requires huge upfront investment, like building a power grid; using them via APIs is pay-as-you-go, which emphasizes scalability and accessibility.

- Autonomy sliders: Borrowing from his experience with autonomous driving at Tesla, this concept lets users adjust how much control the AI has, from minimal assistance (e.g., code suggestions) to full autonomy (e.g., generating entire applications), providing flexibility based on task and preference.
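The slider idea above can be sketched in a few lines. This is a hypothetical illustration only: the level names and the approval rule are assumptions made for this example and do not come from Karpathy's talk or from any real tool.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical slider positions, from least to most AI control."""
    SUGGEST = 1    # AI proposes a completion; the human accepts or ignores it
    EDIT_FILE = 2  # AI may rewrite a file the human selected
    BUILD_APP = 3  # AI may generate and change a whole application


def needs_human_approval(action: Autonomy, slider: Autonomy) -> bool:
    """An action above the current slider setting must be approved by a human."""
    return action > slider


if __name__ == "__main__":
    slider = Autonomy.EDIT_FILE
    for action in Autonomy:
        print(action.name, needs_human_approval(action, slider))
```

Moving the slider up widens what the AI may do unattended; moving it down sends more actions back to the human for review.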

Vibe Coding

Vibe Coding, introduced by Karpathy in February 2025, encourages developers to forget about code and enter a creative 'vibe' state. Simply put, it involves describing needs to an LLM, then accepting its output entirely.

Source: https://x.com/karpathy/status/1886192184808149383

As Karpathy states, in vibe coding, you immerse yourself in the vibe, follow your intuition, and forget you're even coding. The power comes from the AI's strength, making it possible to do almost everything with minimal effort—just ask and accept.

When errors occur, you can paste the error message with no explanation, and the model can usually fix it on its own. For bugs the model cannot fix, asking for a few random changes can make the problem disappear.

This approach is no longer traditional programming: just describe your idea, run the program, copy and paste, and it will roughly work. It turns a famous programmer's saying on its head: "Talk is cheap. Show me the code."

The phrase originated in August 2000, when Linux creator Linus Torvalds replied on the Linux-kernel mailing list: "Talk is cheap. Show me the code." Now, it has evolved into "Code is cheap, show me the prompt (talk)."

Bacterial Programming

Bacterial Programming involves writing code like bacteria—small, modular, self-contained, and easily transferable, inspired by bacterial genomes. It emphasizes minimalism, modularity, and portability, enabling rapid prototyping.

Karpathy suggests combining the strengths of two approaches, the bacterial style and the larger, more tightly coupled monorepo: build a robust monorepo for complex systems while making as much use as possible of bacterial-like code snippets for flexibility and reusability.
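As a rough illustration of what a bacterial-style snippet might look like, consider the helper below. It is a hypothetical example written for this article, not code from Karpathy: a single small function with no imports and no shared state, so it can be copied into another project unchanged.

```python
def chunked(items, size):
    """Yield consecutive lists of at most `size` elements from `items`.

    Self-contained on purpose: no imports, no shared state, no project
    configuration, so it can be pasted into another codebase as-is.
    """
    if size < 1:
        raise ValueError("size must be at least 1")
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly shorter, batch
        yield batch


if __name__ == "__main__":
    print(list(chunked(["a", "b", "c", "d", "e"], 2)))
```

A larger system can still live in a monorepo; the point of the sketch is only that helpers like this one do not need to know anything about it.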

One Repost That Boosted Context Engineering

Prompt engineering was already popular in AI circles, but context engineering only drew wide attention after Karpathy's endorsement, which quickly made it a trending topic; his post has drawn over 2.2 million views.

Source: https://x.com/karpathy/status/1937902205765607626

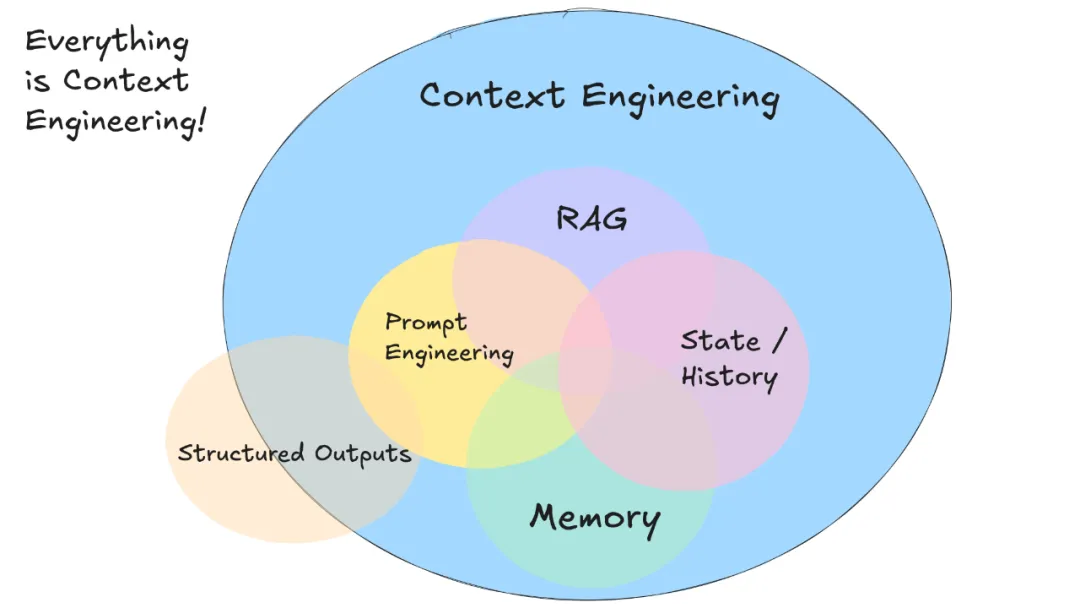

Many people are confused about the difference between prompt engineering and context engineering. A LangChain blog post explains that prompt engineering is a subset of context engineering, which is about providing AI systems with structured, comprehensive context.
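The subset relationship can be sketched as follows. This is a hypothetical illustration and not LangChain's API: the `Context` class, its field names, and the bracketed section labels are assumptions made for this example. The user prompt is just one of several pieces assembled into what the model finally sees.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container for everything sent to a model."""
    system_instructions: str
    retrieved_documents: list = field(default_factory=list)
    conversation_history: list = field(default_factory=list)
    user_prompt: str = ""  # the part that prompt engineering focuses on

    def render(self) -> str:
        """Join the parts into one text block, in a fixed order."""
        parts = [f"[system]\n{self.system_instructions}"]
        for doc in self.retrieved_documents:
            parts.append(f"[document]\n{doc}")
        for turn in self.conversation_history:
            parts.append(f"[history]\n{turn}")
        if self.user_prompt:
            parts.append(f"[user]\n{self.user_prompt}")
        return "\n\n".join(parts)


if __name__ == "__main__":
    ctx = Context(
        system_instructions="Answer briefly.",
        retrieved_documents=["Doc A"],
        user_prompt="What is X?",
    )
    print(ctx.render())
```

Tuning only `user_prompt` corresponds to prompt engineering in this sketch; deciding what goes into the other fields, and in what form, is the broader job the term context engineering describes.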

Interested readers can refer to articles like 'Prompt Engineering, RAG, and the Rise of Context Engineering' and 'Trending Now: Context Engineering Replaces Prompt Engineering as the New Hotspot'.

Beyond these concepts, Karpathy's tweets also highlight issues that are gaining industry attention. One example is the importance of document readability for AI processing: he advocates formats like Markdown so that documents are optimized for AI understanding rather than just for human readers.

Which other concepts do you remember Karpathy proposing or popularizing? Feel free to share in the comments.