Can Robots Instantly Understand Complex Spatial Commands? RoboRefer Enables Robots to Reason About Space and Act Precisely in the Open World!

RoboRefer, a multimodal model developed by Beijing research institutions, combines 3D spatial understanding with multi-step spatial reasoning, enabling robots to understand complex spatial commands and execute them accurately in real-world environments.

The main authors are from Beijing University of Aeronautics and Astronautics (BUAA), Peking University, and the Beijing Academy of Artificial Intelligence (BAAI). The first author, Zhou En-shen, is a master's student at BUAA whose research focuses on embodied intelligence and multimodal large models. The corresponding authors are Chi Cheng of BAAI, Sheng Lu of BUAA, and Zhang Shanghang of Peking University.

Robots moving from labs into the real world face far more complex environments. Real settings are often chaotic, with diverse objects and dynamic changes, unlike the clean, controlled conditions of labs.

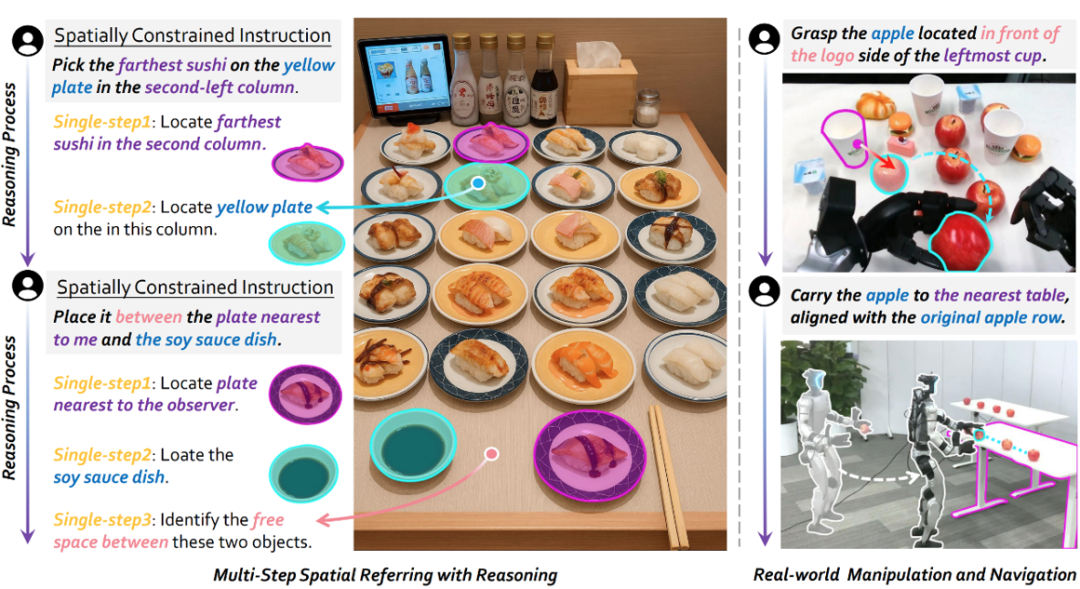

Imagine you’re in a restaurant with a service robot. You say, “Place the yellow sushi plate at the far end of the second row into the empty space between the nearest sushi and soy sauce dish.” (left image) Or you might ask it to “Pick up the apple facing the logo on the left and place it on the nearest table, aligning it with the previous apple at equal intervals.” (right image)

These sound like everyday commands, but each is a classic spatial referring task. Simply put, spatial referring means using spatial relationships such as “farthest,” “second row,” or “equidistant” to identify target objects, decide where to place items, or navigate.
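To make the “equal intervals” constraint from the apple command concrete, here is a toy sketch. It assumes the robot already knows the 3D positions (in centimeters) of apples it has placed in a row, ordered along that row; the `Point3D` type and `next_equal_interval_point` function are hypothetical illustrations, not part of RoboRefer. The target point simply continues the row with the same spacing as the last two apples.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Point3D:
    # Hypothetical 3D position in centimeters (e.g. in a table frame).
    x: float
    y: float
    z: float

    def __add__(self, other: Point3D) -> Point3D:
        return Point3D(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other: Point3D) -> Point3D:
        return Point3D(self.x - other.x, self.y - other.y, self.z - other.z)


def next_equal_interval_point(placed: list[Point3D]) -> Point3D:
    """Extend a row of placed objects by one step at the same spacing.

    Assumes `placed` is ordered along the row and has at least two entries,
    so the interval can be inferred from the last two positions.
    """
    if len(placed) < 2:
        raise ValueError("need at least two placed objects to infer the interval")
    step = placed[-1] - placed[-2]  # spacing vector between the last two objects
    return placed[-1] + step        # continue the row by one more interval


apples = [Point3D(10.0, 50.0, 75.0), Point3D(25.0, 50.0, 75.0)]
print(next_equal_interval_point(apples))  # Point3D(x=40.0, y=50.0, z=75.0)
```

The arithmetic is trivial once positions are known; in a real scene, the difficult part is grounding “the previous apple” in the image and estimating where it sits in 3D, which this sketch takes as given.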

Though it seems simple, this task is hard: even the most advanced multimodal models still struggle to interpret complex 3D scenes accurately and to reason dynamically about where to interact. Spatial referring poses two key challenges:

- Single-step spatial understanding: The robot must first perceive the world, recognizing objects’ spatial attributes (position, orientation) and relations (distance, direction). This is the foundation, and most current research remains at this level.

- Multi-step spatial reasoning: The real challenge is handling sequences of complex spatial constraints, requiring the robot to understand, reason step-by-step, and adaptively respond to various spatial combinations in open environments. This capability is crucial but still underexplored and underestimated.
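The gap between single-step perception and multi-step reasoning can be illustrated with the sushi command. The toy sketch below assumes an idealized scene in which every object's label, color, and tabletop position (in centimeters) is already known. `SceneObject`, `group_rows`, `nearest`, and `resolve_command` are hypothetical names, and the interpretations are assumptions made for illustration: rows run away from the camera and are counted from the left, “far end” means greatest depth, and the empty space is approximated as the midpoint of the two anchor objects. It is a hand-written rule chain, not RoboRefer's learned method; each step consumes the result of the previous one.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    # Hypothetical idealized scene entry: label, color, and tabletop position
    # in cm (x = left/right offset, depth = distance from the camera).
    label: str
    color: str
    x: float
    depth: float


def group_rows(objs: list[SceneObject], gap: float = 10.0) -> list[list[SceneObject]]:
    """Cluster objects into rows ordered left to right.

    A new row starts whenever the x offset jumps by more than `gap`.
    """
    rows: list[list[SceneObject]] = []
    for obj in sorted(objs, key=lambda o: o.x):
        if rows and obj.x - rows[-1][-1].x <= gap:
            rows[-1].append(obj)
        else:
            rows.append([obj])
    return rows


def nearest(objs: list[SceneObject], label: str) -> SceneObject:
    """Return the object with the given label that is closest to the camera."""
    return min((o for o in objs if o.label == label), key=lambda o: o.depth)


def resolve_command(scene: list[SceneObject]) -> tuple[SceneObject, tuple[float, float]]:
    # Step 1 (single-step attribute filter): keep only yellow plates.
    plates = [o for o in scene if o.label == "plate" and o.color == "yellow"]
    # Step 2 (relation): group the plates into rows, take the second from the left.
    second_row = group_rows(plates)[1]
    # Step 3 (relation): the far end of that row is the plate with the greatest depth.
    target = max(second_row, key=lambda o: o.depth)
    # Step 4 (relation): find the two anchors, the nearest sushi and soy sauce dish.
    sushi = nearest(scene, "sushi")
    soy = nearest(scene, "soy_sauce_dish")
    # Step 5: approximate the empty space as the midpoint between the anchors.
    spot = ((sushi.x + soy.x) / 2, (sushi.depth + soy.depth) / 2)
    return target, spot


scene = [
    SceneObject("plate", "yellow", 0.0, 40.0),
    SceneObject("plate", "yellow", 0.0, 70.0),
    SceneObject("plate", "yellow", 30.0, 45.0),
    SceneObject("plate", "yellow", 30.0, 80.0),
    SceneObject("plate", "red", 30.0, 95.0),
    SceneObject("sushi", "white", -20.0, 20.0),
    SceneObject("sushi", "white", 50.0, 60.0),
    SceneObject("soy_sauce_dish", "black", 0.0, 20.0),
]
target, spot = resolve_command(scene)
print(target)  # SceneObject(label='plate', color='yellow', x=30.0, depth=80.0)
print(spot)    # (-10.0, 20.0)
```

Step 1 is single-step understanding (an attribute check), while Steps 2 through 5 chain spatial relations. In an open world, neither the clean scene list nor this fixed decomposition is handed to the robot, which is why multi-step spatial reasoning is the harder problem.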

To address this, Beijing University of Aeronautics and Astronautics, Peking University, and the Beijing Academy of Artificial Intelligence jointly developed RoboRefer, a multimodal model with 3D spatial understanding and reasoning abilities. It achieves precise spatial understanding through full-parameter supervised fine-tuning (SFT), and it substantially improves multi-step reasoning and generalization through reinforcement fine-tuning (RFT), enabling accurate spatial referring in the open world.

- Paper link: https://arxiv.org/pdf/2506.04308

- Paper title: RoboRefer: Towards Spatial Referring with Reasoning in Vision-Language Models for Robotics

- Project homepage: https://zhoues.github.io/RoboRefer

- Code repository: https://github.com/Zhoues/RoboRefer

- Data link: https://huggingface.co/datasets/JingkunAn/RefSpatial

- Evaluation link: https://huggingface.co/datasets/BAAI/RefSpatial-Bench

SFT-trained RoboRefer achieves an 89.6% average success rate on spatial understanding tasks, a new state of the art. On the challenging RefSpatial-Bench benchmark, RFT-trained RoboRefer outperforms all other evaluated models, surpassing Gemini-2.5-Pro by 17.4% in average accuracy.

More experimental results, visualizations, and real-world demo videos of complex scenes can be found in the paper and on the project homepage!